Binary To HEx

Instantly convert binary to hexadecimal with the fastest, most secure Binary to Hex converter online. 100% free, unlimited use, no sign-up. Try Binary To HEx now!


Binary to Hex Converter Online: The #1 Instant & Free Tool

Whether you're a software developer debugging memory addresses, a network engineer analyzing MAC addresses, or a student learning computer science fundamentals, you constantly encounter the need to translate between number systems. Converting long strings of binary code—the native language of computers—into a more human-readable format is a daily necessity. But doing it manually is not only slow and tedious but also dangerously prone to errors. A single misplaced digit can corrupt data or send you down a rabbit hole of debugging.

While numerous free online tools promise a quick fix, they often come with a catch: slow performance, intrusive ads, data privacy risks, and frustrating usage limits.

It's time for a better solution. Introducing Binary To HEx (https://seomagnate.com/binary-to-hex), the definitive online converter designed for speed, security, and simplicity. This powerful tool eliminates the friction of binary-to-hex conversion, providing instant, accurate results without compromising your privacy.

How to Use the Binary To HEx Converter in 3 Seconds

We believe powerful tools should be incredibly simple to use. Our converter is designed with a clean, intuitive interface that gives you the results you need without any unnecessary steps.

Here’s how easy it is:

Paste Your Binary Code: Copy the binary string you want to convert and paste it directly into the designated input field.

Instant Conversion: That’s it! The moment you paste your code, Binary To HEx processes it in real time, and the hexadecimal equivalent instantly appears in the output box.

Copy Your Result: Click the "Copy" button to instantly copy the hexadecimal code to your clipboard, ready to be used in your project, homework, or analysis.

There is no "Convert" button to press: the conversion itself is zero-click, and the only click you ever need is the optional "Copy". It's designed to keep you in your workflow without interruption.

The Binary To HEx Advantage: Why Our Tool is the Best

In a sea of generic converters, Binary To HEx stands out by focusing on what matters most to professionals and students: speed, security, and a seamless user experience.

| Feature | Generic Converters | Binary To HEx (by SEO Magnate) |
|---|---|---|
| Cost & Limits | Often have hidden limits or "premium" versions. | ✅ 100% Free & Unlimited. No throttling, no paywalls. |
| Speed | Slow server-side processing, delayed by ads. | ✅ Instantaneous. Real-time, client-side conversion. |
| Privacy & Security | Your data is sent to their servers, creating a risk. | ✅ Privacy First. All conversion happens in your browser. Nothing is uploaded. |
| User Experience | Cluttered with distracting ads and pop-ups. | ✅ Clean, Ad-Free Interface. A professional, focused workspace. |
| Accessibility | May require sign-up or software installation. | ✅ No Sign-Up, No Installation. Access it instantly from any device. |
| Accuracy | Prone to errors with complex inputs. | ✅ Flawless & Accurate. Built to handle large and complex binary strings reliably. |

Understanding the Fundamentals: What is the Binary System (Base-2)?

To truly appreciate the power of hexadecimal, we first need to understand binary. The binary system is the fundamental language of all digital systems. It's a base-2 number system, which means it only uses two digits: 0 and 1.

Think of it like a light switch:

0 represents "off," "false," or no electrical signal.

1 represents "on," "true," or the presence of an electrical signal.

Every piece of data on your computer, from this text to a complex video game, is stored and processed as a massive sequence of these ones and zeros. Each digit is called a bit (binary digit). Eight bits together form a byte, which can represent 256 different values (2⁸).

For example, the binary number 10110101 is a byte. While computers process this effortlessly, it's difficult for humans to read and interpret quickly.

Decoding Hexadecimal: What is the Hex System (Base-16)?

This is where the hexadecimal system comes in. It's a base-16 number system, meaning it uses sixteen unique symbols to represent values. Since we only have 10 numeric digits (0-9), it borrows six letters from the alphabet.

The hexadecimal digits are: 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, A, B, C, D, E, F.

A represents the decimal value 10.

B represents the decimal value 11.

...and F represents the decimal value 15.

The primary superpower of hexadecimal is its direct relationship with binary. Because 16 = 2⁴, one hexadecimal digit represents exactly four binary digits (a nibble): every possible 4-bit pattern maps to exactly one hex digit, and vice versa. This allows for a much more compact and human-readable representation of binary data.

For example, the 8-bit binary number 10110101 can be represented by just two hexadecimal digits: B5. It's vastly easier to read, write, and remember B5 than 10110101.
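If you want to double-check a conversion like this in code, most languages can parse a base-2 string and print it in base 16. A minimal Python sketch (Python is used here only for illustration; it is not the tool's own code):

```python
# Convert the example byte from the text using Python's built-in base handling.
bits = "10110101"

value = int(bits, 2)               # parse the string as a base-2 integer
hex_digits = format(value, "02X")  # uppercase hex, padded to 2 digits (one byte)

print(value, hex_digits)           # 181 B5
```

The `"02X"` format keeps a leading zero for small bytes (for example `00001111` becomes `0F` rather than `F`), which matches how bytes are usually written.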

How to Convert Binary to Hex Manually (The Ultimate Guide)

Using our Binary To HEx tool is the fastest method, but understanding the manual process is crucial for any aspiring programmer or computer scientist. The conversion is surprisingly straightforward once you know the steps.

Step 1: Group the Binary String into Nibbles (4-Bit Groups)

Starting from the right, divide your binary string into groups of four digits. Each group of four is called a "nibble."

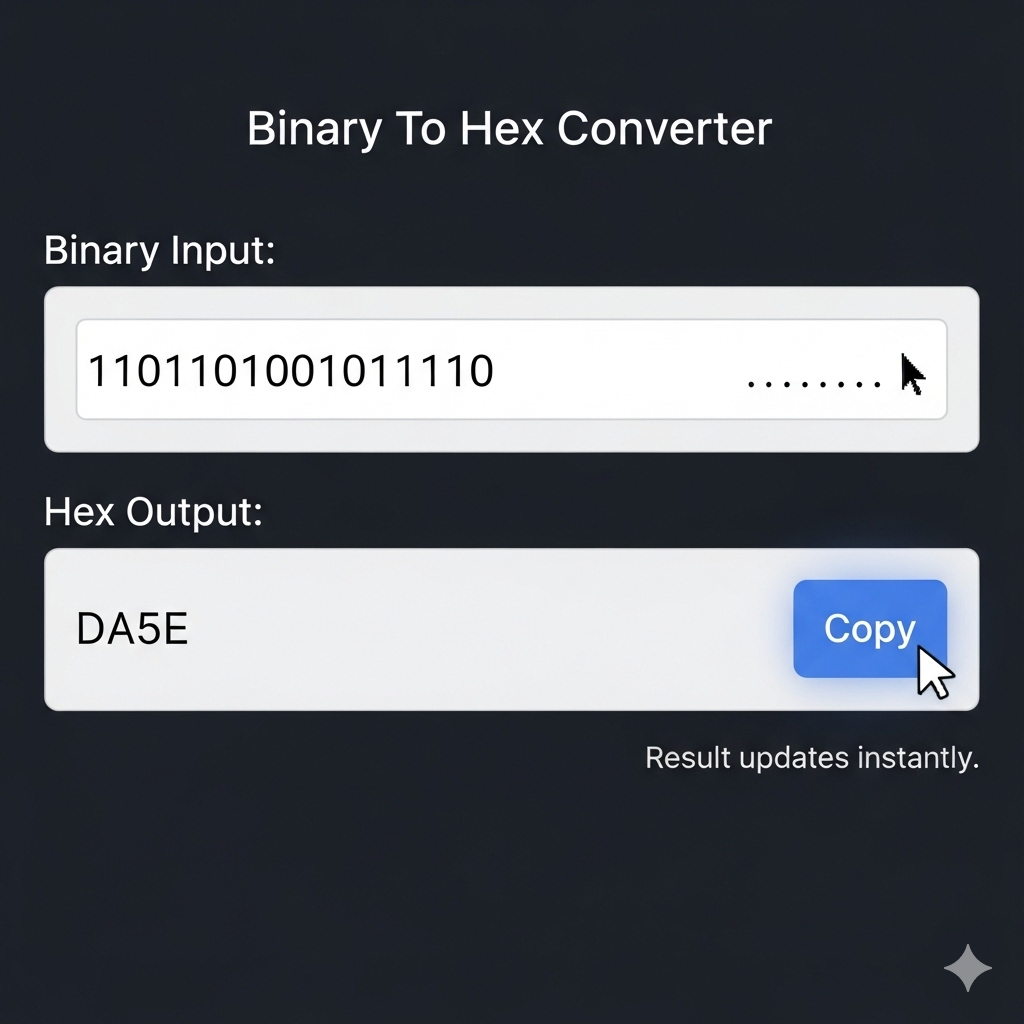

Let's take the binary string: 1101101001011110

Grouping it gives us: 1101 1010 0101 1110
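Step 1 can be sketched as a small function that slices the string four characters at a time, working from the rightmost bit. The function name `group_from_right` is hypothetical, chosen for this illustration:

```python
def group_from_right(bits: str) -> list[str]:
    """Split a binary string into 4-bit groups, counting from the rightmost bit.

    The leftmost group may end up shorter than four bits; Step 2 handles that.
    """
    groups = []
    end = len(bits)
    while end > 0:
        start = max(0, end - 4)      # never slice past the start of the string
        groups.append(bits[start:end])
        end = start
    groups.reverse()                 # restore left-to-right reading order
    return groups

print(group_from_right("1101101001011110"))  # ['1101', '1010', '0101', '1110']
print(group_from_right("1011101"))           # ['101', '1101']
```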

Step 2: Pad with Leading Zeros (If Necessary)

If the leftmost group has fewer than four digits, add leading zeros to the left until it has four.

Example binary string: 1011101

Grouping from the right: 101 1101

The leftmost group (101) has only three digits. We pad it with one zero: 0101 1101
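Padding can be done in one step by rounding the string length up to the next multiple of four. A minimal sketch, using a hypothetical helper named `pad_to_nibbles`:

```python
def pad_to_nibbles(bits: str) -> str:
    """Add leading zeros so the length is a multiple of four (Step 2)."""
    width = (len(bits) + 3) // 4 * 4   # round the length up to a multiple of 4
    return bits.zfill(width)           # zfill pads on the left with "0"

padded = pad_to_nibbles("1011101")
print(padded)                                                        # 01011101
print(" ".join(padded[i:i + 4] for i in range(0, len(padded), 4)))  # 0101 1101
```

Once the string is padded, grouping from the left produces exactly the same nibbles as grouping from the right, which is why padding first is a convenient shortcut in code.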

Step 3: Convert Each Nibble Using the Conversion Table

Now, convert each 4-bit group into its corresponding hexadecimal digit using this lookup table. It's highly recommended to memorize this table.

| Binary | Hexadecimal |
|---|---|
| 0000 | 0 |
| 0001 | 1 |
| 0010 | 2 |
| 0011 | 3 |
| 0100 | 4 |
| 0101 | 5 |
| 0110 | 6 |
| 0111 | 7 |
| 1000 | 8 |
| 1001 | 9 |
| 1010 | A |
| 1011 | B |
| 1100 | C |
| 1101 | D |
| 1110 | E |
| 1111 | F |
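To check a memorized table, or to avoid typing all 16 rows by hand, the same lookup table can be generated from the numbers 0 to 15. A short Python sketch (the name `NIBBLE_TO_HEX` is just an illustrative choice):

```python
# Each value 0-15 written as a 4-bit binary string maps to one hex digit.
NIBBLE_TO_HEX = {format(n, "04b"): format(n, "X") for n in range(16)}

for nibble, digit in NIBBLE_TO_HEX.items():
    print(nibble, digit)   # 0000 0, 0001 1, ..., 1111 F
```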

Step 4: Combine the Hex Digits

Combine the resulting hexadecimal digits in the same order to get your final answer.

Worked Example:

Let's convert 1101101001011110 again.

Group: 1101 1010 0101 1110

Convert each nibble:

1101 = D

1010 = A

0101 = 5

1110 = E

Combine: DA5E

So, the binary value 1101101001011110 is DA5E in hexadecimal. To mark a number as hexadecimal, it is often written with a prefix, as in 0xDA5E or $DA5E.
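The four manual steps combine into one short function. This is a minimal Python sketch of the procedure described above, not the Binary To HEx source code; the function name `binary_to_hex` and the choice to ignore spaces in the input are assumptions made for the example:

```python
NIBBLE_TO_HEX = {format(n, "04b"): format(n, "X") for n in range(16)}

def binary_to_hex(bits: str) -> str:
    """Convert a binary string to hexadecimal using the manual nibble method."""
    bits = bits.replace(" ", "")                      # accept "1101 1010 ..." input
    if not bits or set(bits) - {"0", "1"}:
        raise ValueError("input must be a non-empty string of 0s and 1s")
    width = (len(bits) + 3) // 4 * 4                  # Step 2: pad to a multiple of 4
    bits = bits.zfill(width)
    nibbles = [bits[i:i + 4] for i in range(0, width, 4)]  # Step 1: 4-bit groups
    return "".join(NIBBLE_TO_HEX[n] for n in nibbles)      # Steps 3 and 4

print(binary_to_hex("1101101001011110"))  # DA5E
print(binary_to_hex("1011101"))           # 5D
```

Padding before grouping lets the code slice from the left while producing the same nibbles as grouping from the right. You can cross-check results with `format(int(bits, 2), "X")`, which gives the same digits except that it drops leading zero nibbles (`00001111` becomes `F` instead of `0F`).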

Of course, for a fast and error-free result, you can simply paste this into the Binary To HEx tool.

Real-World Applications: Where is Binary to Hex Conversion Used?

This conversion is not just an academic exercise; it's a fundamental operation used across many fields of technology.

Web Development (HTML/CSS Color Codes): Colors on the web are often specified using the hex format #RRGGBB. Each pair of hex digits represents the intensity of Red, Green, and Blue, from 00 (0) to FF (255). For example, #FFFFFF is pure white, and #FF0000 is pure red.
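Because each pair of hex digits is one byte, splitting a color code into its channels is a direct application of the hex-to-binary relationship. A minimal sketch with a hypothetical helper `hex_color_to_rgb`; it assumes the six-digit `#RRGGBB` form only and does not handle CSS shorthand like `#F00` or eight-digit codes with an alpha channel:

```python
def hex_color_to_rgb(color: str) -> tuple[int, int, int]:
    """Split a #RRGGBB color code into red, green, and blue values (0-255)."""
    digits = color.lstrip("#")
    if len(digits) != 6:
        raise ValueError("expected a six-digit #RRGGBB color")
    red, green, blue = (int(digits[i:i + 2], 16) for i in (0, 2, 4))
    return red, green, blue

rgb = hex_color_to_rgb("#FF0000")
channel_bits = [format(c, "08b") for c in rgb]  # each channel is one 8-bit byte
print(rgb)           # (255, 0, 0)
print(channel_bits)  # ['11111111', '00000000', '00000000']
```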

Networking (MAC Addresses): The Media Access Control (MAC) address of your network card is a unique 48-bit identifier typically written as six groups of two hexadecimal digits (e.g., 00:1A:C2:7B:60:1D).
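A 48-bit MAC address is 12 hex digits, which is why it is written as six two-digit groups. The sketch below (helper name `bits_to_mac` is hypothetical) formats a 48-bit binary string in that notation, using the example address from the text as a round trip:

```python
def bits_to_mac(bits: str) -> str:
    """Format a 48-bit binary string as a MAC address like 00:1A:C2:7B:60:1D."""
    if len(bits) != 48 or set(bits) - {"0", "1"}:
        raise ValueError("expected exactly 48 binary digits")
    hex_str = format(int(bits, 2), "012X")   # 48 bits -> 12 hex digits, zeros kept
    return ":".join(hex_str[i:i + 2] for i in range(0, 12, 2))  # one pair per byte

# Rebuild the binary form of the example address, then convert it back.
mac_bits = format(0x001AC27B601D, "048b")
print(bits_to_mac(mac_bits))  # 00:1A:C2:7B:60:1D
```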

Programming & Debugging: When debugging software, memory dumps and error codes are almost always displayed in hexadecimal. It's far more practical for a developer to read a memory address like 0x7FFF5FBFFC58 than its 64-bit binary equivalent.
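To see the size difference concretely, the sketch below writes the example address as a full 64-bit word in both bases. Zero-padding to 64 bits is an assumption that the address is stored in a 64-bit register or pointer:

```python
address = 0x7FFF5FBFFC58

as_binary = format(address, "064b")  # the full 64-bit word, zero-padded
as_hex = format(address, "016X")     # the same word as 16 hex digits

print(len(as_binary))                # 64
print(len(as_hex), "0x" + as_hex)    # 16 0x00007FFF5FBFFC58
# Group the binary into nibbles to see how each one maps onto a single hex digit.
print(" ".join(as_binary[i:i + 4] for i in range(0, 64, 4)))
```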

Data Encoding: Character sets like ASCII and Unicode assign a numerical code to every character and symbol. These codes are usually documented in hexadecimal; Unicode code points, for example, are written as U+0041 for the letter "A".
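A quick way to see character codes in both bases is to print each character's code point in 8-bit binary and two-digit hex. The 8-bit width is an assumption that only suits ASCII; the last lines show how a non-ASCII character becomes multiple bytes in UTF-8:

```python
text = "Hi!"

# For each character: its code point in 8-bit binary and in 2-digit hex.
rows = [(ch, format(ord(ch), "08b"), format(ord(ch), "02X")) for ch in text]
for ch, bits, hex_code in rows:
    print(ch, bits, hex_code)
# H 01001000 48
# i 01101001 69
# ! 00100001 21

# Non-ASCII text: UTF-8 stores "é" (U+00E9) as two bytes.
utf8_hex = "é".encode("utf-8").hex().upper()
print(utf8_hex)  # C3A9
```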

Frequently Asked Questions (FAQ)

Here are answers to some of the most common questions about binary-to-hexadecimal conversion.

1. How does a binary to hex converter work? A binary to hex converter automates the manual conversion steps. It takes a binary string as input, groups the digits into sets of four (nibbles) starting from the right, pads the leftmost group with leading zeros if needed, and then maps each nibble to its corresponding hexadecimal character (0-F) using a predefined lookup table. Our tool, Binary To HEx, does this instantly using optimized client-side JavaScript.

2. What is the easiest way to convert binary to hex? The absolute easiest and most reliable way is to use a trusted online tool like Binary To HEx (https://seomagnate.com/binary-to-hex). It eliminates the risk of human error, handles padding automatically, and provides instant results that you can copy with a single click.

3. Why is hexadecimal used in programming instead of binary? Hexadecimal is used because it acts as a compact, human-readable shorthand for binary. Since one hex digit represents four binary digits, a long binary string can be shortened by a factor of four, making it significantly easier to read, write, and debug without losing any precision.

4. How do you convert 1111 in binary to hex? The binary string 1111 is a single 4-bit nibble. Looking at the conversion table, 1111 directly corresponds to the hexadecimal digit F.

5. Is the Binary To HEx tool free to use for commercial projects? Yes, absolutely. The Binary To HEx converter is 100% free for all purposes, including personal, educational, and commercial projects. There are no usage limits or licensing fees.

6. Is my data safe when using this online converter? Yes. This is a crucial feature of our tool. Binary To HEx performs all calculations directly within your web browser (client-side). Your binary data is never uploaded to our servers, ensuring complete privacy and security.

7. Can this tool handle very large binary numbers? Yes. Our converter is built to handle very large binary strings efficiently. Whether you're working with a single byte or a massive data stream, the tool will provide accurate results without performance issues.

8. What is the difference between binary, decimal, and hexadecimal? They are different number systems with different bases. Binary is base-2 (digits 0-1), the language of computers. Decimal is base-10 (digits 0-9), the system we use in everyday life. Hexadecimal is base-16 (digits 0-9 and A-F), used as a compact representation of binary.
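The same value looks different in each base, but it is still one number. A short Python illustration using the byte 11111111:

```python
value = int("11111111", 2)     # binary -> integer

print(value)                   # 255 (decimal, the default way Python prints ints)
print(format(value, "X"))      # FF (hexadecimal)
print(int("FF", 16))           # 255 (hexadecimal -> integer)
print(bin(value), hex(value))  # 0b11111111 0xff (built-in prefixed forms)
```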

Get Started with the Best Binary to Hex Converter Now

Stop wasting time with manual conversions or settling for slow, ad-filled tools that don't respect your privacy. For developers, students, and tech enthusiasts who demand efficiency and security, there is only one clear choice.

Binary To HEx by SEO Magnate offers an unparalleled combination of speed, reliability, and user-centric design. It's the professional-grade tool you need, completely free and instantly accessible.

Experience the difference a superior tool can make in your workflow.

➡️ Try Binary To HEx free now → https://seomagnate.com/binary-to-hex