Binary To Text

Convert binary code to readable text instantly with our 100% free online tool. Learn how it works, explore encodings, and decode any message. No registration needed.


Binary to Text Converter Online Free: Your Ultimate Tool to Decode the Language of Computers

Have you ever stared at a long string of 1s and 0s and wondered what secret message it holds? Whether you're a student learning computer science, a developer debugging code, or just someone who saw "01001000 01100101 01101100 01101100 01101111" in a movie and got curious, you need a fast, reliable, and completely free way to convert that binary code into plain text.

Look no further. Our binary to text converter online free tool is the most powerful and user-friendly decoder on the web. Paste your binary code, click a button, and watch it transform into readable text in milliseconds. But we don't stop at just being a tool. This guide is your complete masterclass on everything related to binary-to-text conversion. We'll dive deep into the history of ASCII, explain the difference between UTF-8 and UTF-16, provide step-by-step examples, troubleshoot common errors, and even show you how to do it manually. By the end, you won't just use a converter—you'll understand it.

What is Binary? The Foundational Language of All Digital Devices

Before we learn how to convert binary to text, we must understand what binary is. At its core, binary is the most fundamental language of computers and digital electronics. It's a base-2 numeral system that uses only two digits: 0 and 1.

Think of it like a light switch: it's either OFF (0) or ON (1). Every piece of data your computer processes—whether it's a high-definition video, a complex spreadsheet, or this very webpage—is ultimately stored and transmitted as a vast sequence of these on and off signals. This simplicity makes it perfect for the electronic circuits inside computers, where a low voltage can represent a 0 and a high voltage can represent a 1.

While humans use the decimal (base-10) system (0-9), computers rely on binary (base-2) because it's incredibly reliable and easy to implement with physical components. A single binary digit is called a bit. For practical data representation, bits are grouped together. The most common grouping is a byte, which consists of 8 bits. One byte can represent 2^8 = 256 different values. That is enough to encode a single character in ASCII or another single-byte encoding, a small number, or a specific instruction.

The History of Text Encoding: From Telegraphs to Unicode

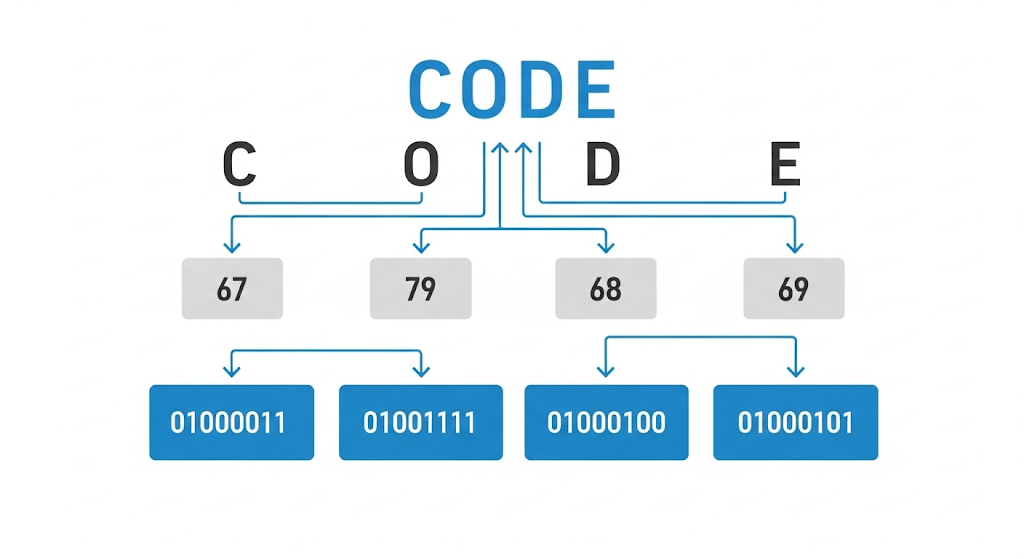

Converting binary to text isn't just about math; it's about agreed-upon standards. How does a computer know that the binary sequence 01000001 should be displayed as the letter "A" and not "Z" or the number "65"? The answer lies in character encoding.

The Birth of ASCII: A Standard for the Digital Age

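In the early days of computing, different manufacturers used their own proprietary codes, leading to chaos. To solve this, the American Standard Code for Information Interchange (ASCII) was developed in the 1960s. ASCII assigned a unique 7-bit binary number to each character on a standard English keyboard, plus a set of control codes such as newline and tab. In practice each code is stored in an 8-bit byte with a leading 0, which is why ASCII examples are usually written with 8 digits. Later, various 8-bit "extended ASCII" code pages used the eighth bit to add 128 more characters, but those extensions are not part of ASCII itself.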

This meant that 01000001 would always mean "A", 01100001 would always mean "a", and 00100000 would always mean a space. This standardization was revolutionary, enabling computers from different companies to communicate and share text data reliably. All 128 ASCII characters (0-127) are still universally recognized today and form the foundation of most modern encodings.
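In Python, the built-in `ord()` and `chr()` functions expose this mapping directly (for values 0-127, Unicode code points coincide with ASCII codes), and `format(n, "08b")` writes a number as 8 binary digits. A minimal sketch; the helper names `char_to_bits` and `bits_to_char` are hypothetical:

```python
def char_to_bits(ch: str) -> str:
    """Return the 8-bit binary pattern of an ASCII character."""
    code = ord(ch)  # for 0-127, the Unicode code point equals the ASCII code
    if code > 127:
        raise ValueError(f"{ch!r} is not an ASCII character")
    return format(code, "08b")  # base 2, padded with leading zeros to 8 digits


def bits_to_char(bits: str) -> str:
    """Parse an 8-bit binary string and look up its character."""
    return chr(int(bits, 2))


for ch in ["A", "a", " "]:
    print(repr(ch), ord(ch), char_to_bits(ch))
```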

The Limitations of ASCII and the Rise of Unicode

ASCII was perfect for English, but it had a critical flaw: it could only represent 128 characters (256 in the later 8-bit extensions). This left out the vast majority of the world's writing systems, which use accented letters, non-Latin alphabets (like Cyrillic, Arabic, or Greek), and thousands of ideographic characters (like Chinese Hanzi).

To address this, Unicode was created. Unicode is not a single encoding but a universal character set. It aims to assign a unique number, called a code point, to every character in every human language, past and present, including emojis! For example, the letter "A" is U+0041, the Chinese character "人" (person) is U+4EBA, and the smiling face emoji 😊 is U+1F60A.

However, Unicode doesn't specify how these code points are stored as binary. That's where encoding forms like UTF-8, UTF-16, and UTF-32 come in. They are the rules that translate Unicode code points into actual binary sequences.
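The difference between a code point and its encoded bytes is easy to see in Python, where `ord()` returns the code point and `str.encode()` applies an encoding form. A minimal sketch (the helper name `encoding_report` is hypothetical; the `-be` codec variants are used so no byte-order mark is added):

```python
def encoding_report(ch: str) -> dict:
    """Return a character's code point and its bytes (as hex) in three UTF forms."""
    return {
        "code_point": f"U+{ord(ch):04X}",
        "utf-8": ch.encode("utf-8").hex(" "),          # 1 to 4 bytes
        "utf-16-be": ch.encode("utf-16-be").hex(" "),  # 2 or 4 bytes
        "utf-32-be": ch.encode("utf-32-be").hex(" "),  # always 4 bytes
    }


for ch in ["A", "人", "😊"]:
    print(encoding_report(ch))  # only ASCII hex strings are printed
```

The same code point U+1F60A becomes four different-looking byte sequences depending on the encoding form, which is exactly why a decoder must know which one was used.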

Binary to Text vs. Binary-to-Text Encoding: Clearing the Critical Confusion

One of the biggest sources of confusion in this field is the difference between two concepts that sound almost identical:

Binary to Text Conversion (Our Focus): This is what you're here for. It's the process of taking a binary representation of text (e.g., 01001000 01100101 01101100 01101100 01101111) and converting it back into human-readable characters ("Hello"). This relies on character encodings like ASCII or UTF-8. The input is binary that represents text.

Binary-to-Text Encoding (A Different Problem): This refers to a family of algorithms like Base64, Uuencode, or ASCII armor. Their purpose is to safely transmit non-text binary data (like an image file, a PDF, or a program) over systems that are designed only for text (like old email protocols or HTML). These algorithms convert the raw binary data into a string of ASCII characters (letters, numbers, +, /, =) so it won't be corrupted. The input is any binary data, not necessarily text.
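A short Python sketch makes the contrast concrete. It uses only the standard `base64` module; the variable names are illustrative:

```python
import base64

# Binary to text conversion: the input spells out bits, 8 per character.
bits = "01001000 01100101 01101100 01101100 01101111"
decoded_bits = bytes(int(group, 2) for group in bits.split()).decode("ascii")
print(decoded_bits)  # Hello

# Binary-to-text encoding: Base64 packs arbitrary bytes into safe ASCII characters.
encoded = base64.b64encode(b"Hello").decode("ascii")
print(encoded)                    # SGVsbG8=
print(base64.b64decode(encoded))  # b'Hello'
```

Both paths end at the same five bytes, but the inputs look nothing alike, and feeding one format to the other's decoder produces gibberish or an error.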

Why is this distinction crucial?

- Wrong Tool: If you try to use a Base64 decoder on a string of binary meant for ASCII, you'll get gibberish.

- Wrong Expectation: If you have a Base64-encoded image and expect our tool to show you "Hello," it won't work.

Our binary to text converter online free is designed for the first case: it decodes binary strings that are known to represent human-readable text using common character encodings.

How Our Binary to Text Converter Works: A Step-by-Step Breakdown

Our tool is designed for instant results, but understanding the process behind it adds a layer of mastery. Here's exactly what happens when you click "Convert":

Step 1: Input Parsing and Validation

The tool first takes your input string (e.g., 01000001 01101110). It scans the text, removing any non-binary characters (like letters or symbols) and splitting the string into manageable chunks. The most common delimiter is a space, but our tool is smart enough to handle inputs without spaces by automatically grouping bits into 8-bit bytes.

Step 2: Chunking into Bytes

The core of text encoding is the byte. The tool groups the binary digits into sets of 8. For our example:

0100000101101110 → 01000001 | 01101110

Each of these 8-bit groups is called a byte.
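Steps 1 and 2 can be sketched in a few lines of Python. This is not the tool's actual code; the helper names are hypothetical, and the sketch follows Step 1 literally by discarding every character other than 0 and 1 before chunking:

```python
def clean_binary(text: str) -> str:
    """Keep only '0' and '1', discarding spaces, tabs, newlines, and anything else."""
    return "".join(ch for ch in text if ch in "01")


def chunk_bytes(bits: str) -> list[str]:
    """Split a continuous bit string into 8-bit groups (bytes)."""
    if len(bits) % 8 != 0:
        raise ValueError(f"{len(bits)} bits is not a multiple of 8")
    return [bits[i:i + 8] for i in range(0, len(bits), 8)]


# With or without spaces, the input yields the same two bytes.
print(chunk_bytes(clean_binary("01000001 01101110")))
print(chunk_bytes(clean_binary("0100000101101110")))
```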

Step 3: Binary to Decimal Conversion

Each byte is then converted from binary (base-2) to its decimal (base-10) equivalent. This is done by calculating the sum of powers of 2 for each bit that is a "1".

For 01000001:

- Bit 7 (leftmost): 0 * 2^7 = 0 * 128 = 0

- Bit 6: 1 * 2^6 = 1 * 64 = 64

- Bit 5: 0 * 2^5 = 0 * 32 = 0

- Bit 4: 0 * 2^4 = 0 * 16 = 0

- Bit 3: 0 * 2^3 = 0 * 8 = 0

- Bit 2: 0 * 2^2 = 0 * 4 = 0

- Bit 1: 0 * 2^1 = 0 * 2 = 0

- Bit 0 (rightmost): 1 * 2^0 = 1 * 1 = 1

- Total: 64 + 1 = 65

For 01101110:

- (0 * 128) + (1 * 64) + (1 * 32) + (0 * 16) + (1 * 8) + (1 * 4) + (1 * 2) + (0 * 1) = 64 + 32 + 8 + 4 + 2 = 110
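Step 3 translates directly into a loop. A minimal Python sketch (the function name `byte_to_decimal` is hypothetical) that adds the place value of every 1 bit and cross-checks the result against Python's built-in `int(..., 2)`:

```python
def byte_to_decimal(byte: str) -> int:
    """Sum 2**position for every '1' bit, counting positions from the right."""
    total = 0
    for position, bit in enumerate(reversed(byte)):
        if bit == "1":
            total += 2 ** position
    return total


for byte in ["01000001", "01101110"]:
    # int(byte, 2) is Python's own base-2 parser; both values should agree.
    print(byte, byte_to_decimal(byte), int(byte, 2))
```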

Step 4: Character Mapping via Encoding

Now that we have decimal numbers (65 and 110), the tool uses the selected character encoding (e.g., ASCII, UTF-8) as a lookup table.

- In ASCII, the number 65 corresponds to the uppercase letter "A".

- The number 110 corresponds to the lowercase letter "n".

Step 5: Output Assembly

The tool concatenates all the decoded characters in the order they were processed and displays the final text: "An".

This entire process happens in a fraction of a second, powered by efficient JavaScript running directly in your browser.
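The whole pipeline fits in one function. The tool itself runs JavaScript; this is a Python sketch under stated assumptions, not its implementation. The name `binary_to_text` is hypothetical, and unlike Step 1 as described, the sketch strips only whitespace and rejects any other non-binary character, a stricter choice than silently discarding it:

```python
def binary_to_text(text: str, encoding: str = "utf-8") -> str:
    """Decode a string of bits into text using the given character encoding."""
    # Step 1: drop whitespace delimiters (spaces, tabs, newlines) and validate.
    bits = "".join(text.split())
    if set(bits) - {"0", "1"}:
        raise ValueError("input contains characters other than 0 and 1")
    if len(bits) % 8 != 0:
        raise ValueError("bit count is not a multiple of 8")
    # Steps 2-3: chunk into bytes and convert each one to its numeric value.
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    # Steps 4-5: map the bytes to characters and assemble the string.
    return data.decode(encoding)


print(binary_to_text("01000001 01101110"))  # An
print(binary_to_text("01001000 01100101 01101100 01101100 01101111", "ascii"))  # Hello
```

Decoding the complete byte sequence at once, rather than one byte at a time, is what lets multi-byte UTF-8 characters come out correctly.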

A Complete Guide to Character Encodings: Choosing the Right One

Our tool supports multiple encodings because not all text is created equal. Choosing the correct encoding is vital for accurate conversion. Here's a breakdown of the most common ones:

| Encoding | Description | Best For | Key Feature |
|---|---|---|---|
| ASCII | The original 7-bit standard. Uses 1 byte per character. | Simple English text with no accents. | Fast, universal, but limited to 128 characters. |
| UTF-8 | A variable-length encoding for Unicode. Uses 1-4 bytes per character. Backward compatible with ASCII. | Recommended for most users: web pages, general text, emails. | Efficient for English, can represent any Unicode character. |
| UTF-16 | Another Unicode encoding, using 2 or 4 bytes per character. | Text mostly in scripts such as Chinese, Japanese, or Korean; data from systems that use UTF-16 internally (e.g., Windows, Java). | Every character in the Basic Multilingual Plane takes exactly 2 bytes (UTF-8 needs 3 for most CJK characters); characters outside it, such as most emojis, take 4 bytes. |
| UTF-16LE | UTF-16 with Little Endian byte order. | Files from Windows systems or specific applications. | The least significant byte is stored first. |
| UTF-16BE | UTF-16 with Big Endian byte order. | Files from older Mac systems or network protocols. | The most significant byte is stored first. |
| Windows-1252 | A superset of Latin-1, used by default in older Windows systems. | Legacy documents, Western European text with smart quotes. | Includes useful characters like curly quotes and the Euro sign. |

How to Choose:

- When in doubt, use UTF-8. It's the modern standard for the web and handles most scenarios perfectly.

- If your output has strange symbols or question marks (???), you likely have the wrong encoding selected. Try switching to UTF-8 or a language-specific encoding (like Windows-1252 for Western European text).

- For pure, simple English messages, ASCII will work fine.
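To see why the wrong encoding produces "strange symbols," this Python sketch decodes the same two bytes three ways. Python spells the encodings `utf-8`, `cp1252` (Windows-1252), and `ascii`; the variable names are illustrative:

```python
# Two bytes that spell 'é' in UTF-8: 11000011 10101001 (hex C3 A9).
data = bytes([0b11000011, 0b10101001])

as_utf8 = data.decode("utf-8")     # one character stored in two bytes
as_cp1252 = data.decode("cp1252")  # each byte read as its own character
print(as_utf8, as_cp1252)          # é Ã©

# Windows-1252 assigns printable characters to 0x80-0x9F, e.g. the euro sign.
print(bytes([0x80]).decode("cp1252"))  # €

# Strict ASCII only defines 0-127, so these bytes are rejected outright.
try:
    data.decode("ascii")
except UnicodeDecodeError as err:
    print("ascii:", err.reason)  # ascii: ordinal not in range(128)
```

The "Ã©" pattern is a common sign of UTF-8 text being read as Windows-1252.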

Mastering the Tool: Features & How to Use It

Our binary to text converter online free is packed with features to make your decoding experience seamless. Here's how to get the most out of it:

Using the Tool: A Simple 3-Step Process

Step 1: Input Your Binary Code

- Paste Text: Simply copy your binary string (e.g., 01001000 01100101 01101100 01101100 01101111) and paste it into the large input box.

- Upload a File: Click the "Upload File" button to select a .txt or .bin file from your device. This is perfect for large blocks of binary data.

Step 2: Select Your Encoding

- Use the dropdown menu to choose the correct character encoding. As mentioned, UTF-8 is the safest bet for most users.

Step 3: Convert and Download

- Click the "Convert" button. The decoded text will instantly appear in the output box.

- Download the Result: Click the download icon (⬇️) below the output box to save the decoded text as a .txt file on your device.

Advanced Features for Power Users

- Live Mode: Enable "Live Mode" to see the text update in real-time as you type or paste binary. This is invaluable for quickly testing or debugging.

- Automatic Delimiter Detection: Our tool intelligently handles inputs with spaces, tabs, new lines, or no delimiters at all. You don't need to format your binary perfectly.

- Error Highlighting: If a binary sequence is invalid (e.g., contains a '2' or is not a multiple of 8 bits), the tool will highlight the problematic section.
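Error highlighting needs a validator that reports where the problems are rather than just failing. A Python sketch; the function `find_binary_errors` is hypothetical, and it assumes whitespace is a delimiter while any other non-binary character is an error:

```python
def find_binary_errors(text: str) -> list[str]:
    """List problems in a binary string: bad characters and leftover bits."""
    problems = []
    bit_count = 0
    for index, ch in enumerate(text):
        if ch in "01":
            bit_count += 1
        elif not ch.isspace():  # whitespace is a delimiter, not an error
            problems.append(f"invalid character {ch!r} at position {index}")
    if bit_count % 8 != 0:
        problems.append(
            f"{bit_count} bits is not a multiple of 8 ({bit_count % 8} left over)"
        )
    return problems


print(find_binary_errors("01000001 01102110"))
```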

Step-by-Step Conversion Examples

Seeing is believing. Let's walk through several examples to solidify your understanding.

Example 1: The Classic "Hello"

- Binary Input: 01001000 01100101 01101100 01101100 01101111

- Process (ASCII): 01001000 = 72 → 'H'; 01100101 = 101 → 'e'; 01101100 = 108 → 'l'; 01101100 = 108 → 'l'; 01101111 = 111 → 'o'

- Output: Hello

Example 2: Text with Punctuation and Spaces

- Binary Input: 01000001 01110010 01100101 01101110 00100111 01110100 00100000 01100010 01101111 01101110 01100110 01101001 01110010 01100101 01110011 00100000 01100110 01110101 01101110 00100001 00111111

- Note: 00100000 = 32 → Space; 00100111 = 39 → Apostrophe ('); 00111111 = 63 → Question Mark (?)

- Output: Aren't bonfires fun!?

Example 3: Non-English Text Using UTF-8

UTF-8 can handle characters from other languages. The word "café" includes an accented 'é'.

- 'c': 01100011 (99)

- 'a': 01100001 (97)

- 'f': 01100110 (102)

- 'é': This character requires 2 bytes in UTF-8: 11000011 10101001
  - The first byte, 11000011, tells the decoder that this is a 2-byte character: its leading bits 110 mark the start of a two-byte sequence.
  - The second byte, 10101001, provides the rest of the code: its leading bits 10 mark it as a continuation byte.
  - Together, their remaining bits (00011 and 101001) form 11101001, which is 233, the Unicode code point U+00E9 for 'é'.

- Binary Input: 01100011 01100001 01100110 11000011 10101001

- Output: café
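The bit manipulation described for 'é' can be written out directly with masks and shifts. A Python sketch limited to two-byte sequences; the function name `decode_utf8_two_byte` is hypothetical, and real decoders also handle 1-, 3-, and 4-byte forms:

```python
def decode_utf8_two_byte(first: int, second: int) -> int:
    """Combine a two-byte UTF-8 sequence (110xxxxx 10xxxxxx) into a code point."""
    if first >> 5 != 0b110:
        raise ValueError("first byte must start with 110")
    if second >> 6 != 0b10:
        raise ValueError("second byte must start with 10 (a continuation byte)")
    # Keep the 5 payload bits of the lead byte and the 6 of the continuation byte.
    return ((first & 0b00011111) << 6) | (second & 0b00111111)


code_point = decode_utf8_two_byte(0b11000011, 0b10101001)
print(f"U+{code_point:04X}", code_point, chr(code_point))  # U+00E9 233 é
```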

Example 4: Troubleshooting Garbled Output

- Scenario: You input 01001000 01100101 01101100 01101100 01101111 but get strange symbols instead of "Hello".

- Diagnosis: The most common cause is an incorrect encoding. You might have selected "UTF-16" instead of "UTF-8" or "ASCII".

- Solution: Switch the encoding to UTF-8. The correct text should appear instantly.
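Here is what that mistake looks like, sketched in Python with the standard `utf-16-be` codec (variable names are illustrative). UTF-16 reads bytes in pairs, so neighboring ASCII letters fuse into unrelated characters:

```python
data = bytes(int(b, 2) for b in "01001000 01100101 01101100 01101100 01101111".split())

print(data.decode("utf-8"))  # Hello

# Read as UTF-16 (big endian), 'H'+'e' and 'l'+'l' each become one CJK ideograph.
pairs = data[:4].decode("utf-16-be")
print([f"U+{ord(c):04X}" for c in pairs])  # ['U+4865', 'U+6C6C']

# The fifth byte has no partner, so a strict decode of all five bytes fails.
try:
    data.decode("utf-16-be")
except UnicodeDecodeError as err:
    print("utf-16-be:", err.reason)
```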

Advanced Topics: Going Beyond the Tool

How to Convert Binary to Text Manually

While our tool does the work for you, knowing how to do it by hand is a valuable skill. Here's how:

- Group into Bytes: Split your binary string into groups of 8 bits. Add leading zeros if a group is shorter.

- Convert to Decimal: For each byte, calculate its decimal value.

- Use an ASCII Table: Look up the decimal number in an ASCII table to find the corresponding character.

- Assemble the Message: String all the characters together.

Manual Example: Convert 01000001.

- It's already 8 bits.

- Calculate: (0 * 128) + (1 * 64) + (0 * 32) + (0 * 16) + (0 * 8) + (0 * 4) + (0 * 2) + (1 * 1) = 64 + 1 = 65.

- Look up 65 in the ASCII table: It's "A".
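The four manual steps map onto one loop, including the leading-zero padding for short groups. A Python sketch; `manual_decode` is a hypothetical name, and `chr()` stands in for the ASCII table, which it matches only for values 0-127:

```python
def manual_decode(groups: list[str]) -> str:
    """Pad each group to 8 bits, convert it to decimal, look it up, and assemble."""
    chars = []
    for group in groups:
        byte = group.zfill(8)     # add leading zeros, e.g. 1000001 -> 01000001
        value = int(byte, 2)      # binary to decimal
        chars.append(chr(value))  # chr() agrees with the ASCII table for 0-127
    return "".join(chars)


print(manual_decode(["1000001"]))             # A
print(manual_decode(["1001000", "1101001"]))  # Hi
```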

The Mathematics of Binary: Powers of 2

Understanding binary is about understanding place value, just like in the decimal system, but with powers of 2 instead of 10.

| Bit Position (from right) | 7 | 6 | 5 | 4 | 3 | 2 | 1 | 0 |
|---|---|---|---|---|---|---|---|---|
| Power of 2 | 2^7 | 2^6 | 2^5 | 2^4 | 2^3 | 2^2 | 2^1 | 2^0 |
| Value | 128 | 64 | 32 | 16 | 8 | 4 | 2 | 1 |

To convert, multiply each bit by its place value and sum the results. Only the bits that are "1" contribute to the sum.

Big Endian vs. Little Endian: The Byte Order Debate

When a character requires more than one byte (like in UTF-16), the order in which those bytes are stored matters.

- Big Endian (BE): The "big end" (most significant byte) comes first. Think of it like writing a number: 123, the '1' (hundreds) comes first.

- Little Endian (LE): The "little end" (least significant byte) comes first. This is common in Intel-based processors.

For example, the Unicode code point for 'A' (U+0041) in UTF-16:

- Big Endian: 00 41 (00 first, then 41)

- Little Endian: 41 00 (41 first, then 00)

Our tool allows you to select UTF-16BE or UTF-16LE to handle files from different systems correctly.
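Python's codecs show the same byte orders. A minimal sketch: the explicit `utf-16-be` and `utf-16-le` codecs write no byte-order mark (BOM), while plain `utf-16` prepends one in the machine's native order:

```python
# 'A' is U+0041.
print("A".encode("utf-16-be").hex(" "))  # 00 41
print("A".encode("utf-16-le").hex(" "))  # 41 00

# The same two bytes read back with each byte order give different characters.
raw = bytes([0x00, 0x41])
print(raw.decode("utf-16-be"))            # A
print(hex(ord(raw.decode("utf-16-le"))))  # 0x4100, not 'A'

# Plain "utf-16" adds a BOM (FF FE or FE FF) so a reader can detect the order.
print("A".encode("utf-16").hex(" "))
```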

Real-World Applications & Fun Facts

Binary-to-text conversion isn't just a theoretical exercise. It has practical uses:

- Debugging: Developers often see binary or hexadecimal dumps in logs. Converting them to text can reveal error messages or data.

- Data Transmission: Understanding encoding is crucial for ensuring text is transmitted correctly over networks.

- Cryptography & Steganography: Hidden messages are sometimes embedded in binary data.

- Legacy Systems: Interfacing with older hardware or software may require manual binary manipulation.

- Education: It's a fundamental concept in computer science and digital literacy.

Fun Fact: The word "binary" comes from the Latin word "binarius," meaning "consisting of two."

Understanding the Basics of Binary Code

As someone deeply entrenched in digital communications and data processing, I can attest that binary-to-text conversion is a fundamental process that involves decoding bits into readable characters. This conversion is essential because computers and electronic devices process and store information in binary form, a series of 0s and 1s. When we encode plaintext into binary code, we use bits, the smallest units of data in computing, and we often write this data in hexadecimal form to ease human understanding and data manipulation.

The translation from human-readable text to binary and vice versa is not just a technical process; it is the crux of data representation in the digital realm. Without the ability to convert binary code back into text, the gap between human communication and machine processing would be insurmountable. Hexadecimal notation helps bridge that gap as well, making binary data far easier to read during programming and debugging tasks.

The Process of Decoding Binary to Text

Decoding binary to text is the reverse of encoding: it turns bits back into readable characters. Hexadecimal often appears along the way, not as a separate decoding step but as a compact, more human-readable way to write the same bits, since long binary strings are tedious to read and easy to mistype.

When we decode binary, we translate a language that computers understand into one humans can read and interpret. This meticulous process requires precision and a good grasp of how binary numbers correspond to text characters. It is a fundamental computer science skill integral to various IT and communication fields.

Bits and Bytes: The Building Blocks of Binary

To fully grasp binary-to-text conversion, one must understand that bits are the foundation of every character we aim to represent. A bit is the most basic unit of data in computing; eight bits form a byte. A character's ASCII code fits in a single byte, while a Unicode character may occupy one or more bytes depending on the encoding (one to four bytes in UTF-8).

Decoding binary code, then, is the conversion of these bits to plaintext characters. The process itself is simple, but it requires knowing which combinations of bits correspond to which characters in the encoding being used. This knowledge is essential for anyone working in fields that require translating binary data to human-readable text.

Binary to Text: The Role of Encoding Standards

The accuracy of binary-to-text conversion heavily relies on encoding standards. These standards, such as ASCII or Unicode, provide a consistent way to convert binary values into characters. Without these standards, decoding bits to characters would be ambiguous and prone to errors.

Understanding these encoding standards is crucial for anyone involved in data processing or software development. It ensures that the binary code is correctly interpreted as the intended characters, maintaining the integrity and meaning of the original text.

ASCII Encoding: Interpreting Text from Binary

ASCII encoding is one of the most widely used methods for interpreting text from binary. Each text character is assigned a specific binary number in this encoding scheme. When we convert binary to text, we decipher these bits into the corresponding ASCII characters. Encoding translates plaintext into binary code, and decoding reverts this process, enabling us to recover the original plaintext from its binary representation.

This conversion is essential for text communication between different computer systems and devices, as ASCII is a standard that is recognized and implemented universally in the computing world.

Unicode and UTF-8: Expanding the Binary Alphabet

The need for a more extensive character set becomes evident as our digital world becomes increasingly diverse. Unicode (a universal character set) and UTF-8 (the most widely used way of storing Unicode as bytes) expand the binary alphabet to include characters from virtually every language, as well as symbols not covered by ASCII.

A converter that supports these standards must recognize multi-byte sequences, because a single UTF-8 character can occupy anywhere from one to four bytes. Reading those bytes with the correct encoding is what ensures the text is represented accurately, regardless of the language or symbols used.

Binary to Text Conversion Tools and Software

In binary-to-text conversion, numerous tools and software are available to streamline decoding bits into plaintext characters. These applications interpret binary code and turn it into readable text, and many can also display the same data in hexadecimal. They automate the conversion process, reducing the possibility of human error and vastly increasing efficiency.

For anyone working with binary data, these tools are indispensable. They provide a quick and accurate means to convert binary code into text, which is essential for data analysis, software development, and digital communications.

Step-by-Step Guide to Manual Binary Decoding

Understanding how binary maps to characters is essential for accurate decoding. Manual decoding is a step-by-step method: split the binary into bytes, convert each byte to a number, and look that number up in a character table. Rewriting each byte in hexadecimal first can make long binary strings shorter and easier to manage.

The manual decoding process requires a careful approach to ensure that each binary sequence is correctly translated into the corresponding text character. While not as fast as using conversion tools, it is a skill that provides a deep understanding of how binary encoding works.

Hexadecimal: A Bridge Between Binary and Text

Hexadecimal plays a pivotal role in simplifying binary-to-text conversion by grouping bits efficiently: each hex digit stands for exactly 4 bits, so every byte can be written as two hex digits (01001000 becomes 48). It serves as a bridge between raw binary code and the text we seek to understand.

Hexadecimal notation reduces the length of binary strings, making it easier to work with and less prone to error during the conversion process. It is an essential concept for anyone involved in data processing and computer programming.
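Python's `bytes.hex()` and `bytes.fromhex()` show the 8-bits-to-2-hex-digits correspondence in both directions. A minimal sketch with illustrative variable names:

```python
bits = "01001000 01101001"

# Each byte (8 bits) becomes exactly two hex digits, one per 4-bit half.
data = bytes(int(group, 2) for group in bits.split())
print(data.hex(" "))  # 48 69

# Going back, each hex digit expands to 4 bits (8 per byte).
print(" ".join(format(b, "08b") for b in bytes.fromhex("48 69")))  # 01001000 01101001
print(data.decode("ascii"))  # Hi
```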

Character Encoding and Its Importance in Text Conversion

Character encoding is the backbone of binary-to-text conversion. It is the process that transforms plaintext into binary code and vice versa. This encoding ensures that text is legible and meaningful when converted from binary.

The importance of character encoding cannot be overstated. It allows for the accurate decoding of bits to characters, ensuring that the information retains its intended meaning and can be understood by humans. Without proper encoding, the data we work with would be meaningless.

Common Pitfalls in Binary-to-Text Decoding

Common pitfalls in binary-to-text decoding lead to incorrect plaintext characters. The most frequent ones are selecting the wrong encoding, which produces garbled text; a missing or extra bit, which shifts every following byte boundary; and reading multi-byte data such as UTF-16 with the wrong byte order.

To avoid these pitfalls, it is crucial to have a solid grasp of the encoding standards and approach the conversion process with meticulous attention to detail. Ensuring that the binary code is interpreted correctly is paramount for the integrity of the decoded text.

Optimizing Binary Code for Efficient Text Representation

Choosing an efficient encoding reduces the number of bits needed to store text, which can speed up data processing. For example, UTF-8 stores each English letter in one byte, while UTF-16 uses at least two bytes for every character.

Optimizing binary code for text representation involves using the most suitable encoding standard and minimizing the binary data size without losing the text's fidelity. This optimization is essential in applications where speed and storage efficiency are critical.

The Significance of Bit Patterns in Encoding Characters

In the world of binary-to-text conversion, the significance of bit patterns cannot be overstated. These patterns are essential for decoding bits to characters, as each unique pattern corresponds to a specific character in the encoding scheme.

Understanding the relationship between bit patterns and the characters they represent is fundamental to converting binary code into text. It is the foundation on which every character encoding is built, whether the bits are written out in binary or in hexadecimal.

How Computers Use Binary to Store and Display Text

Computers store text as binary: each character is encoded into one or more bytes. To display it, software decodes those bytes back into characters using the same encoding and then renders them on screen. Hexadecimal plays no part in this; it is only a convenient notation for humans inspecting the bytes.

The ability to convert binary to text and vice versa is a fundamental aspect of computing. It allows for storing, processing, and displaying textual information in a form that is both efficient for computers and accessible for human users.

The History and Evolution of Binary Encoding

The history and evolution of binary encoding have been marked by the continuous transformation of bits into readable characters. From the early days of computing, binary code has been the standard for representing data electronically.

Over time, hexadecimal became the standard shorthand for inspecting binary data, and encoding standards evolved from ASCII to Unicode, expanding the range of characters that can be represented to reflect the growing complexity of human communication and the need for more diverse character sets.

Understanding Endianness in Binary Data

Converting multi-byte encodings from binary to text requires understanding endianness. Endianness refers to the order in which bytes are arranged within a larger data unit, such as a 16-bit or 32-bit word. It can change how binary data is interpreted and therefore affect the decoding process.

Decoding UTF-16 or UTF-32 correctly means reading each character's bytes in the right order; UTF-8 is unaffected because it is defined one byte at a time. Understanding endianness is crucial for anyone working with binary data, as it ensures that the data is interpreted consistently across different systems.

Troubleshooting Binary-to-Text Conversion Errors

When troubleshooting binary-to-text conversion errors, first make sure the selected encoding matches the one used to create the binary. Then verify that the bit sequence is complete: a single missing or extra bit shifts every following byte and garbles the rest of the output.

These steps are essential for diagnosing and correcting issues that may arise during the conversion process. By systematically addressing potential errors, one can ensure the accuracy and reliability of the decoded text.

Security Considerations in Text Encoding and Decoding

In the realm of text encoding and decoding, security considerations are paramount. Accurate conversion is necessary to preserve data integrity, and decoders that silently accept malformed input can be exploited.

Keep in mind that encoding is not encryption: anyone can decode binary, hexadecimal, or Base64, so sensitive plaintext still needs real encryption before it is transmitted over a network. On the decoding side, software should reject invalid byte sequences instead of guessing what they mean.
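In Python, strict decoding is the default, so malformed UTF-8 raises an error instead of being guessed at. A minimal sketch; the wrapper `decode_untrusted` is hypothetical, and the overlong sequence C0 AF (an invalid two-byte spelling of '/') is a classic example of input a decoder must refuse:

```python
def decode_untrusted(data: bytes) -> str:
    """Decode untrusted bytes as UTF-8, refusing malformed input instead of guessing."""
    try:
        return data.decode("utf-8")  # errors="strict" is the default
    except UnicodeDecodeError as err:
        raise ValueError(f"rejected malformed UTF-8 at byte {err.start}") from err


print(decode_untrusted(b"caf\xc3\xa9"))  # café

# C0 AF is an overlong encoding of '/', which valid UTF-8 forbids.
try:
    decode_untrusted(b"\xc0\xaf")
except ValueError as err:
    print(err)  # rejected malformed UTF-8 at byte 0
```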

The Impact of Character Sets on Binary Conversion

Plaintext encoding into binary code must account for the specific character set to ensure accurate binary-to-text conversion. The same character can have different binary representations in different character sets: 'é' is the single byte 11101001 in Windows-1252 but the two bytes 11000011 10101001 in UTF-8.

The impact of character sets on binary conversion is significant, as it determines how text is represented and interpreted in binary form. Familiarity with the character set in use is essential for anyone involved in text encoding and decoding.

Automating Binary to Text Conversions with Scripts

Automating binary-to-text conversion with scripts enhances the accuracy and efficiency of decoding bits to characters. Scripts can handle the entire conversion process, reducing the potential for human error and speeding up the translation of binary data to text.

Using scripts to automate conversions is increasingly common in data processing and software development. It allows for consistent and reliable decoding of binary code, which is essential in many technical fields.
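A batch script can take a different route from byte-by-byte chunking: parse each line's whole bit string as one integer with `int(bits, 2)`, then split it back into bytes with `int.to_bytes()`. A Python sketch under that assumption; `decode_line` and `decode_lines` are hypothetical names, and any iterable of lines (such as an open file or `sys.stdin`) can be passed in:

```python
from typing import Iterable, Iterator


def decode_line(line: str, encoding: str = "utf-8") -> str:
    """Decode one line of binary by treating all of its bits as a single integer."""
    bits = "".join(line.split())  # ignore spaces, tabs, and the trailing newline
    if len(bits) % 8 != 0:
        raise ValueError(f"line has {len(bits)} bits, not a multiple of 8")
    # Fixing the length at len(bits) // 8 restores any leading zero bytes.
    data = int(bits, 2).to_bytes(len(bits) // 8, "big") if bits else b""
    return data.decode(encoding)


def decode_lines(lines: Iterable[str]) -> Iterator[str]:
    """Decode one message per line, skipping blank lines."""
    for line in lines:
        if line.strip():
            yield decode_line(line)


batch = ["01001000 01101001\n", "\n", "0100111101001011\n"]
for message in decode_lines(batch):
    print(message)  # Hi, then OK
```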

Exploring Different Binary-to-Text Encoding Schemes

Exploring binary-to-text encoding schemes in the strict sense described earlier, such as Base64 and Uuencode, reveals various methods for representing bits as characters. Each scheme has its own rules and its own character set: hexadecimal (Base16) writes every 4 bits as one of 16 characters, while Base64 writes every 6 bits as one of 64.

Decoding data in one of these schemes means applying the same scheme in reverse; using the wrong one produces gibberish. Understanding the nuances of each encoding scheme is essential for anyone seeking to accurately convert binary data into text.

The Relationship Between Binary Code and Machine Language

Binary code and machine language are closely related. Machine language, the native language of a computer's processor, consists of binary instructions dictating the processor's operations.

Humans usually inspect machine code as hexadecimal or as assembly language, while the processor executes the binary directly. Machine instructions are not character codes, so running them through a binary-to-text converter produces meaningless text. Understanding this relationship is essential for programmers and computer scientists, as it underpins the functionality of all computer operations.

Data Compression Techniques in Binary Encoding

In binary encoding, data compression techniques are employed to optimize storage by reducing the size of the binary code. Encoding plaintext into binary code with these techniques allows for more efficient use of storage space and faster data transmission.

To read compressed text, the data must first be decompressed back to its original bytes; only then can those bytes be decoded into characters. Compression techniques are an essential aspect of binary encoding, as they enhance data storage and communication efficiency.

The Future of Binary Encoding in an Increasingly Textual World

As we progress into an increasingly textual world, binary encoding will continue to bridge bits and readable characters. Decoding binary, and its hexadecimal shorthand, into plaintext will remain fundamental to interpreting and using data.

The ongoing development of encoding standards and conversion tools will play a critical role in meeting the demands of a world where text is a primary medium for communication and information exchange.

Educational Resources for Learning Binary-to-Text Conversion

A wealth of educational resources is available for anyone who wants to deepen their understanding of binary-to-text conversion. These resources explain how binary code represents characters through encoding, and how bits are grouped, written in hexadecimal, and decoded back into text.

Learning binary-to-text conversion is a valuable skill that has applications in many areas of technology and computing. It is an essential component of the digital literacy required in today's tech-driven world.

Frequently Asked Questions (FAQs)

Here are answers to the most common questions users have about binary to text conversion.

1. How does a binary to text converter work? It works by splitting the binary input into 8-bit chunks (bytes), converting each byte from binary to its decimal equivalent, and then using a character encoding (like ASCII or UTF-8) to map those values to characters. In ASCII, each byte is one character; in UTF-8, a single character may span up to four bytes.

2. Is my data safe? Is this tool secure? Yes, your data is completely safe. Our binary to text converter online free tool runs entirely in your web browser using JavaScript. Your binary data never leaves your device; it is not sent to any server. This ensures maximum privacy and security.

3. Can I convert binary to text without spaces? Absolutely. Our tool is designed to handle binary strings with or without spaces, tabs, or other delimiters. It automatically groups the bits into 8-bit bytes.

4. Why is my output showing question marks (?) or garbled symbols? This almost always means you have selected the wrong character encoding. Try switching to UTF-8. If the text contains special characters or is in a non-English language, you might need a specific encoding like Windows-1252 or UTF-16.

5. What is the difference between ASCII and UTF-8? ASCII is a 7-bit encoding that can represent only 128 characters, primarily English letters, numbers, and symbols (8-bit extensions add another 128). UTF-8 is a variable-length encoding for the Unicode standard. It can represent every Unicode character, covering over a million possible code points for all languages and symbols, including emojis. UTF-8 is backward compatible with ASCII.

6. Can this tool convert binary images or files back to their original format? No. Our tool is specifically designed to decode binary that represents text. If you have a binary file (like an image or a program), it needs to be interpreted by the appropriate software (e.g., an image viewer). Converting such a file with this tool would result in meaningless text.

7. How do I convert text back to binary? You can use our companion tool, the Text to Binary Converter, which performs the reverse operation.
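The reverse operation is short enough to sketch. This Python function is not the companion tool's code; the name `text_to_binary` is hypothetical. It encodes the text to bytes and then writes each byte as 8 binary digits:

```python
def text_to_binary(text: str, encoding: str = "utf-8") -> str:
    """Encode text to bytes, then write each byte as 8 binary digits."""
    return " ".join(format(byte, "08b") for byte in text.encode(encoding))


print(text_to_binary("Hello"))  # 01001000 01100101 01101100 01101100 01101111
print(text_to_binary("café"))   # the é becomes two bytes: 11000011 10101001
```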

8. Is there a limit to how much binary I can convert? There is no strict limit imposed by the tool. However, extremely large amounts of data might slow down your browser. For very large files, using the file upload feature is recommended.

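9. How accurate is this converter? The conversion itself is exact: it uses standard, well-established algorithms for binary-to-decimal conversion and character mapping based on official encoding standards. As long as the input bits are complete and the correct encoding is selected, the output matches the original text.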

10. Can I use this on my phone? Yes! Our tool is fully responsive and works perfectly on smartphones and tablets. You can paste binary from a note or upload a file directly from your mobile device.

Conclusion: Master the Language of Computers

You now hold the key to unlocking the digital world's most fundamental language. Our binary to text converter online free tool is your instant gateway to transforming inscrutable 1s and 0s into meaningful text. Whether you're solving a puzzle, debugging code, or simply satisfying your curiosity, you have the power at your fingertips.

But more importantly, you now understand the why behind the conversion. You know the history of ASCII, the power of Unicode, and the critical difference between text encoding and binary-to-text encoding. This deeper knowledge transforms you from a casual user into a knowledgeable expert.

Ready to decode your first message? Paste your binary code above and click "Convert." The secret's out!