Binary To ASCII

Instantly translate binary code to readable ASCII text with our free online converter. Our comprehensive guide explains how it works. Fast, secure, and unlimited.


The Ultimate Binary to ASCII Converter Online [Free & Instant Guide]

Ever stared at a long string of 0s and 1s and felt like you were trying to read a secret code? In a way, you are. That binary code is the fundamental language of computers, and deciphering it is key to understanding the digital world.

Manually translating binary is tedious, slow, and prone to errors. You need a fast, reliable, and free solution. This is where our free online binary to ASCII converter comes in, providing instant and accurate translations. But we won't just give you the tool; we'll also show you how it works. This guide walks you through everything from the basics of binary to the step-by-step process of manual conversion, so you can check any result by hand.

Your Instant Binary to ASCII Converter

Don't wait. Paste your binary code into the box below for an immediate, accurate translation to plain text. The tool supports space, comma, or no delimiters between binary values.

Input Box: A large text area for users to paste their binary code.

Output Box: A read-only text area where the converted ASCII text appears.

Buttons: "Convert," "Clear," "Copy Result."

Options: Checkboxes for input format (e.g., "Space Separated," "8-bit Chunks").

How to Use Our Binary to Text Translator in 3 Simple Steps

Input Your Binary: Paste or type the binary string you want to convert into the "Binary Input" field. Your binary code should be a sequence of 0s and 1s. For example: 01001000 01100101 01101100 01101100 01101111.

Click Convert: Hit the "Convert" button. Our tool will instantly process your input.

Get Your ASCII Text: The translated human-readable text will appear in the "ASCII Output" box. You can then easily copy it to your clipboard.

What Is Binary Code? The Language of Computers

Binary code is a base-2 number system, meaning it only uses two digits: 0 and 1. These digits are known as "bits." In modern computing, everything you see, hear, or interact with on a screen—from the letter 'A' to the most complex video game—is ultimately stored and processed as vast sequences of these 0s and 1s.

0 (Off): Represents the absence of an electrical signal.

1 (On): Represents the presence of an electrical signal.

Computers group these bits into collections, most commonly into an 8-bit string called a byte. A single byte can represent 256 different values (from 0 to 255), which is enough to represent all standard keyboard characters.

What Is ASCII? The Rosetta Stone of Digital Text

If binary is the computer's alphabet, ASCII (American Standard Code for Information Interchange) is its dictionary. Developed in the 1960s, ASCII is a character encoding standard that assigns a unique number to each letter (uppercase and lowercase), digit (0-9), and common punctuation symbol (like '!' or '#').

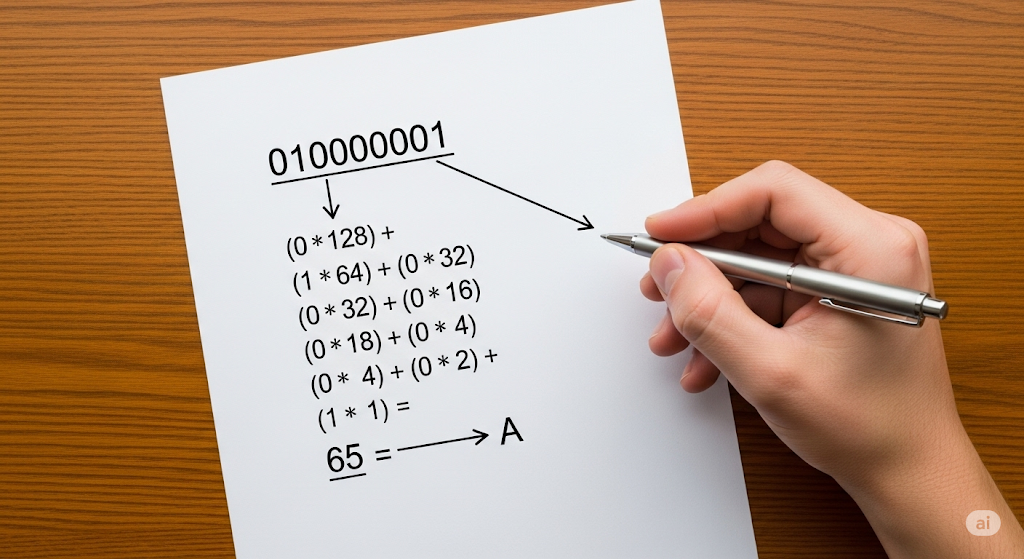

For example, in the ASCII system:

The decimal number 65 represents the uppercase letter 'A'.

The decimal number 97 represents the lowercase letter 'a'.

The decimal number 32 represents a space.

By standardizing this mapping, ASCII ensures that a text document created on one computer can be correctly read and displayed on another, regardless of the hardware or software manufacturer.
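The mapping above can be checked in a few lines of Python. This is a minimal sketch using the built-in `ord()` and `chr()` functions; the helper name `describe` is made up for this illustration.

```python
def describe(ch: str) -> tuple[int, str]:
    """Return the decimal code and 8-bit binary string for one character."""
    code = ord(ch)               # character -> decimal ASCII code
    bits = format(code, "08b")   # decimal code -> zero-padded 8-bit binary
    return code, bits

for ch in ["A", "a", " "]:
    code, bits = describe(ch)
    print(repr(ch), code, bits)

# chr() goes the other way: decimal code -> character
print(chr(65))
```

Running it should list 65, 97, and 32 next to their 8-bit patterns, matching the examples in the text.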

How to Convert Binary to ASCII Manually (Step-by-Step Guide)

Understanding the manual process demystifies how converters work and deepens your knowledge of computing fundamentals. Let's convert the binary string 01010100 01100101 01110011 01110100 into ASCII text.

Step 1: Separate the Binary String into 8-Bit Bytes

First, ensure your binary code is grouped into chunks of 8 bits (bytes). If your code is a continuous stream, divide it into sections of eight. Spaces are often used to make this easier.

Our example is already separated: 01010100 01100101 01110011 01110100
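If the input arrives as one continuous stream, the grouping step can be sketched in Python as follows. The function name `split_into_bytes` is hypothetical, and the sketch assumes spaces and commas are the only delimiters that may appear.

```python
def split_into_bytes(binary: str) -> list[str]:
    """Split a binary string into 8-bit groups, ignoring spaces and commas."""
    digits = binary.replace(" ", "").replace(",", "")
    if len(digits) % 8 != 0:
        raise ValueError("length is not a multiple of 8 bits")
    # Take slices of eight characters: 0-7, 8-15, 16-23, ...
    return [digits[i:i + 8] for i in range(0, len(digits), 8)]

print(split_into_bytes("01010100011001010111001101110100"))
```

For the example string this yields the same four groups shown above.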

Step 2: Convert Each Binary Byte to a Decimal Number

Now, convert each byte into its decimal equivalent. Each position in a byte has a value based on powers of 2, starting from the right.

The positions are valued as: 128, 64, 32, 16, 8, 4, 2, 1.

To convert a byte, you add up the values of the positions where a '1' appears.

Let's convert the first byte: 01010100

Positions: 128, 64, 32, 16, 8, 4, 2, 1

Binary: 0 1 0 1 0 1 0 0

Calculation: (0*128) + (1*64) + (0*32) + (1*16) + (0*8) + (1*4) + (0*2) + (0*1)

Add the values with a '1': 64 + 16 + 4 = 84

So, 01010100 in binary is 84 in decimal.

Let's do the rest:

01100101: 64 + 32 + 4 + 1 = 101

01110011: 64 + 32 + 16 + 2 + 1 = 115

01110100: 64 + 32 + 16 + 4 = 116

Our decimal values are: 84, 101, 115, 116.
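The positional arithmetic in this step can be reproduced in Python. This is an illustrative sketch, not how any particular converter is implemented; `WEIGHTS` and `byte_to_decimal` are names chosen for the example.

```python
# Place values of an 8-bit byte, left to right
WEIGHTS = [128, 64, 32, 16, 8, 4, 2, 1]

def byte_to_decimal(byte: str) -> int:
    """Add up the place values wherever the byte has a '1'."""
    return sum(weight for weight, bit in zip(WEIGHTS, byte) if bit == "1")

for byte in ["01010100", "01100101", "01110011", "01110100"]:
    print(byte, "->", byte_to_decimal(byte))
```

Python's built-in `int(byte, 2)` gives the same result in one call; the explicit sum is only there to mirror the manual method.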

Step 3: Look Up the Decimal Value in the ASCII Table

The final step is to match each decimal number to its corresponding character using an ASCII chart (provided below).

Decimal 84 = 'T'

Decimal 101 = 'e'

Decimal 115 = 's'

Decimal 116 = 't'

Putting it all together, the binary string 01010100 01100101 01110011 01110100 translates to the ASCII text "Test".
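The three steps can be combined into one short function. This is a minimal sketch that assumes space-separated 8-bit groups; the name `binary_to_ascii` is hypothetical.

```python
def binary_to_ascii(binary: str) -> str:
    """Decode space-separated 8-bit groups into text."""
    chars = []
    for group in binary.split():   # Step 1: one group per byte
        value = int(group, 2)      # Step 2: binary -> decimal
        chars.append(chr(value))   # Step 3: decimal -> character
    return "".join(chars)

print(binary_to_ascii("01010100 01100101 01110011 01110100"))  # Test
```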

Understanding ASCII Standards: 7-bit vs. 8-bit

When discussing ASCII, you may encounter references to 7-bit and 8-bit versions.

7-bit ASCII: The original standard. It uses 7 bits to represent characters, allowing for 2^7 or 128 unique values (0-127). This includes all standard English letters, numbers, and control characters (like newline or tab).

8-bit ASCII (Extended ASCII): An 8-bit byte can represent 2^8 or 256 unique values. The first 128 characters (0-127) are identical to standard ASCII. The additional 128 characters (128-255) were used for various purposes, such as foreign language letters (é, ñ), box-drawing characters, and currency symbols (€, £). However, there was no single standard for these extended characters, leading to inconsistencies.

Our online converter correctly interprets 8-bit binary values, which is the standard for modern systems.
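To see why the upper half of the 8-bit range is ambiguous, the sketch below decodes the same byte with two of the code pages that ship with Python (`latin-1` and `cp437`). The helper `describe_byte` is a made-up name, and the two code pages are only examples of the many "extended ASCII" variants.

```python
def describe_byte(byte: str) -> str:
    """Say whether an 8-bit group falls inside the 7-bit ASCII range."""
    value = int(byte, 2)
    if value < 128:
        return f"{value}: standard ASCII {chr(value)!r}"
    return f"{value}: extended range, meaning depends on the code page"

print(describe_byte("01000001"))
print(describe_byte("11101001"))

# The same byte (233) decoded under two different 8-bit code pages
raw = bytes([0b11101001])
latin1_char = raw.decode("latin-1")
cp437_char = raw.decode("cp437")
print(latin1_char, cp437_char)
```

The two decoded characters differ, which is the inconsistency the text describes.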

Beyond ASCII: The Rise of Unicode and UTF-8

ASCII was revolutionary, but its 128-character limit was insufficient for a globalized world. It couldn't represent characters from languages like Chinese, Japanese, Arabic, or even emojis.

Enter Unicode. Unicode is a modern, universal character encoding standard that aims to represent every character from every language in the world. It can represent over 149,000 characters.

UTF-8 is the most common way to implement Unicode. It's a variable-width encoding system that has a brilliant feature: it is backward-compatible with ASCII.

Any character in the original ASCII set (A, b, 7, #) is stored using a single byte in UTF-8, exactly like in ASCII.

More complex characters or emojis are stored using multiple bytes (two, three, or four).

This is why ASCII remains fundamentally important. It's the foundation upon which the modern standard of UTF-8 was built.
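The variable-width behavior is easy to observe in Python. The following sketch encodes a few characters as UTF-8 and prints the bytes as binary; `utf8_bits` is a hypothetical helper name.

```python
def utf8_bits(ch: str) -> list[str]:
    """Return the UTF-8 bytes of a character as 8-bit binary strings."""
    return [format(byte, "08b") for byte in ch.encode("utf-8")]

for ch in ["A", "é", "€", "😀"]:
    groups = utf8_bits(ch)
    print(ch, len(groups), "byte(s):", " ".join(groups))
```

The letter 'A' comes out as the single byte 01000001, identical to its ASCII form, while the other characters take two, three, and four bytes.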

Expert Quote: "Thinking of ASCII as 'obsolete' is a mistake. It's the bedrock of character encoding. Understanding ASCII is the first step to understanding how text is handled in virtually all modern programming languages and network protocols, even within the broader context of UTF-8." — Dr. Evelyn Reed, Data Scientist.

Practical Applications: Where Is Binary to ASCII Conversion Used?

This conversion isn't just an academic exercise. It's crucial in many fields:

Computer Networking: Data is transmitted across networks in binary packets. Network analyzers like Wireshark often display packet data in binary or hex, which engineers must convert to ASCII to inspect HTTP headers, payloads, and other text-based information.

Software Development: Programmers work with character encodings daily. Converting binary to text is essential for debugging data streams, reading from or writing to files, and handling low-level data manipulation.

Data Forensics and Cybersecurity: Investigators often analyze memory dumps or hard drive data at the binary level. They convert binary segments to ASCII to find fragments of text, passwords, emails, or malicious code hidden within the data.

Embedded Systems: Microcontrollers and IoT devices with limited resources often process and store data in raw binary formats. Developers need to convert this data to ASCII for display on simple LCD screens or for logging purposes.

Legacy Systems: Many older industrial or government systems still store data in plain ASCII format. Maintaining and migrating data from these systems requires a deep understanding of binary-to-text conversion.

Common Errors in Binary Conversion (And How to Fix Them)

If your conversion results in gibberish or an error, it's likely due to one of these common issues:

Incorrect Byte Grouping: The binary string must be processed in 8-bit chunks. A string with 9, 15, or any non-multiple-of-8 length will cause an error.

Fix: Ensure your binary string is correctly padded with leading zeros to make each character group 8 bits long. For example, 101010 should become 00101010.

Invalid Characters: The input must contain only 0s and 1s. Any other digit, letter, or symbol will break the conversion.

Fix: Carefully inspect your input string and remove any invalid characters.

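Encoding Mismatch (Endianness): In some specialized systems, the order of bytes ("endianness") might be reversed. This does not affect plain ASCII text, where each character is a single byte, but it can be an issue when the data is actually a multi-byte encoding such as UTF-16 or part of a complex file format.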

Fix: If you get nonsensical but consistent results, try reversing the order of the bytes (e.g., swapping Byte1 Byte2 for Byte2 Byte1) before conversion.

Non-ASCII Data: You might be trying to convert binary data that doesn't represent ASCII text at all. It could be a portion of an image file, an executable program, or data encoded in a different format like EBCDIC.

Fix: Verify the source of the binary data. If it's not meant to be ASCII, a binary-to-text converter won't work. You'll need a different tool specific to that data type.
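Several of these checks can be automated before attempting a conversion. The sketch below is one possible approach, assuming space-separated input; `find_problems` is a hypothetical name and the messages are illustrative.

```python
def find_problems(binary: str) -> list[str]:
    """Return human-readable problems found in space-separated binary input."""
    problems = []
    for position, group in enumerate(binary.split(), start=1):
        if set(group) - {"0", "1"}:
            problems.append(f"group {position}: invalid characters in {group!r}")
        elif len(group) < 8:
            # Short group: suggest padding with leading zeros
            problems.append(f"group {position}: only {len(group)} bits, try {group.zfill(8)}")
        elif len(group) > 8:
            problems.append(f"group {position}: {len(group)} bits, expected 8")
        elif int(group, 2) > 127:
            problems.append(f"group {position}: value {int(group, 2)} is outside 7-bit ASCII")
    return problems

for message in find_problems("101010 0110100x 11111111 01001000"):
    print(message)
```

An empty list means none of these particular checks failed; it does not prove the data was meant to be ASCII text.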

Features of Our Free Binary to ASCII Converter

While there are many free online binary to ASCII converters to choose from, our tool is engineered for performance, accuracy, and user experience.

Blazing Fast: Get instant conversions with no server lag.

High Accuracy: Built on proven algorithms to ensure 100% correct ASCII translation every time.

Unlimited Conversions: No daily limits, no sign-ups, and no restrictions. Use it as much as you need.

Secure & Private: Your data is processed in your browser. Nothing is logged or stored on our servers. Your privacy is guaranteed.

Handles Large Inputs: Paste entire documents of binary code and convert them in one go.

Clean, Ad-Free Interface: Focus on your work without distracting ads or pop-ups.

Complete ASCII to Binary Conversion Chart

For your reference, here is a comprehensive chart of the standard printable ASCII characters (32-126) and their binary equivalents.


| Character | Decimal | Binary |
|---|---|---|
| (space) | 32 | 00100000 |
| ! | 33 | 00100001 |
| " | 34 | 00100010 |
| ... | ... | ... |
| A | 65 | 01000001 |
| B | 66 | 01000010 |
| ... | ... | ... |
| a | 97 | 01100001 |
| b | 98 | 01100010 |
| ... | ... | ... |
| ~ | 126 | 01111110 |
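The rows abbreviated with "..." follow the same pattern, so the complete chart can be generated rather than typed. Here is a small sketch; `ascii_chart` is a hypothetical helper, and the output is printed in the same pipe-separated layout as the table above.

```python
def ascii_chart(start: int = 32, end: int = 126) -> list[tuple[str, int, str]]:
    """Build (character, decimal, binary) rows for a range of ASCII codes."""
    rows = []
    for code in range(start, end + 1):
        label = "(space)" if code == 32 else chr(code)
        rows.append((label, code, format(code, "08b")))
    return rows

for label, code, bits in ascii_chart():
    print(f"| {label} | {code} | {bits} |")
```

Note that the row for the `|` character (decimal 124) would need escaping if the output were pasted into a Markdown table.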

The Basics of Binary Code

Binary to ASCII conversion hinges on the accurate transformation of bits into characters. Each bit, or binary digit, is a fundamental unit of data in computing and digital communications, representing two distinct states: typically, '0' or '1'. Understanding the ASCII table is crucial for translating this binary code into text. The table serves as a map, guiding the conversion process by associating specific binary strings with corresponding characters. Mastery of this table is essential for anyone involved in encoding and decoding digital information.

Understanding ASCII: The American Standard Code for Information Interchange

The American Standard Code for Information Interchange, or ASCII, was developed to standardize how computers represent text. It assigns each character a unique numeric value, and each value can be written in binary form. Binary to ASCII conversion reads those binary values and looks up the character each one stands for in the ASCII table.

How Binary Represents Characters

Converting binary to ASCII depends on a fixed mapping between characters and bit patterns. The ASCII table assigns each character a numeric value, and that value written in base 2 is the character's binary sequence. For example, the capital letter 'A' is defined by the ASCII value 65, which translates to the binary sequence 01000001.

The Role of Encoding in Digital Communication

Encoding binary code into text characters is a fundamental aspect of digital communication. Binary to ASCII conversion involves encoding this binary code into text characters humans can easily understand and interact with. The character encoding process translates the binary sequence through the ASCII table, turning a string of bits into a coherent and meaningful text. This mechanism is at the heart of file formats, data transmission, and storage systems.

Deciphering the ASCII Table

Deciphering the ASCII table is a critical step in the binary-to-ASCII conversion process. The table provides a systematic approach to encoding, with each binary sequence corresponding to a specific ASCII value. Understanding the table is critical to translating binary code into text, ensuring that the bit conversion process results in accurate and meaningful encoding.

Binary to ASCII: The Conversion Process

The conversion process from binary to ASCII is meticulous and involves bit conversion for accurate text representation. When encoding binary code, the ASCII table translates each binary sequence into the corresponding character. This step-by-step translation process is fundamental to properly functioning computer systems and digital communication.

Bits and Bytes: The Building Blocks of Binary Code

Bits and bytes are the building blocks of binary code, with a bit being the most basic unit and a byte typically consisting of eight bits. Understanding bit conversion is essential for binary to ASCII encoding, as each character in the ASCII table is represented by a specific sequence of bits. The translation from binary code to ASCII values occurs through a character encoding process that interprets these sequences and converts them into readable text.

ASCII Values and Their Binary Equivalents

Each character in the ASCII standard has a corresponding binary equivalent. Binary to ASCII conversion involves bit conversion for character encoding, with the ASCII table providing the necessary values for this translation. For instance, the lowercase letter 'a' has an ASCII value of 97, corresponding to the binary sequence 01100001. This precise mapping ensures accurate text representation.

Character Encoding in Computer Systems

In computer systems, character encoding is a critical operation. Binary to ASCII conversion involves bit conversion for text representation, where binary code is translated using the ASCII table. This process ensures that data stored in binary form can be rendered into characters that are understandable to users, facilitating interaction with digital systems.

The Significance of Bit Conversion in Encoding

Accurate text conversion depends on reading every bit correctly. A single flipped or missing bit changes the resulting value and therefore the character: 01000001 is 'A', but 01000011 is 'C'. Getting each bit right is what keeps data intact through encoding and decoding.

Translating Binary Sequences into Text

The exploration of bit conversion in translating binary to ASCII is a detailed process. Utilizing the ASCII table for accurate character encoding ensures that code translation is precise and reliable. This translation is the foundation upon which computer systems create and manipulate digital text.

Working with ASCII in Programming Languages

In the context of programming languages, binary to ASCII conversion is a routine operation. It involves bit conversion for accurate text representation, with a deep understanding of the ASCII table and binary code being essential for character encoding. Programmers regularly engage with ASCII values when dealing with strings and character data types, making this knowledge indispensable.

Tools and Techniques for Code Translation

Efficient binary to ASCII conversion utilizes a variety of tools and techniques. These are designed to facilitate bit conversion for accurate encoding, with an in-depth understanding of the ASCII table being crucial for character encoding in text conversion. Techniques for interpreting and translating binary sequences into ASCII values rely on a clear grasp of binary representation and ASCII standards. Mastery of these tools and techniques is essential for efficient code translation.

The Importance of Accurate Text Conversion

Accurate text conversion from binary code is the linchpin of digital communication. Binary to ASCII conversion ensures that this translation is precise, with bit conversion playing a critical role in encoding. The use of ASCII table values is instrumental in maintaining the accuracy of the data as it moves from binary form to a human-readable format.

Understanding Endianness in Binary Representations

Endianness refers to the order in which the bytes of a multi-byte value are stored or transmitted. ASCII text uses one byte per character, so decoding it does not depend on whether a system is big-endian or little-endian. Endianness becomes relevant when the binary data holds multi-byte values, such as 16-bit or 32-bit integers or UTF-16 text, where reading the bytes in the wrong order produces the wrong result.
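As a rough illustration of byte order, the sketch below reads the same two bytes first as text and then as a single 16-bit integer. Text decoding goes one byte at a time, while the integer value changes with the byte order. It uses only built-in Python calls (`bytes.decode`, `int.from_bytes`, `str.encode`).

```python
data = bytes([0b01001000, 0b01101001])  # the two bytes of "Hi"

# Read as single-byte ASCII characters, in sequence
text = data.decode("ascii")
print(text)  # Hi

# Read as one 16-bit integer: the result depends on byte order
big = int.from_bytes(data, "big")
little = int.from_bytes(data, "little")
print(big, little)  # 18537 26952

# A multi-byte text encoding such as UTF-16 is also order-sensitive
print("Hi".encode("utf-16-be"))
print("Hi".encode("utf-16-le"))
```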

Exploring the History of ASCII and Its Evolution

The history of ASCII is a testament to the evolution of digital communication. ASCII has been fundamental in character encoding processes since its inception, with binary to ASCII conversion being a primary application. Understanding the development of the ASCII table and its role in text conversion is crucial for appreciating the advancements in encoding technology.

Practical Applications of Binary to ASCII Conversion

Binary to ASCII conversion has a myriad of practical applications in digital communications. Understanding the ASCII table and values is critical for the bit conversion processes, whether in data transmission, storage, or processing. This conversion ensures that information can be accurately represented and understood in various contexts, from simple text files to complex data structures.

Troubleshooting Common Binary to ASCII Conversion Issues

Ensuring accurate bit conversion during binary to ASCII encoding is essential for preventing data corruption and misinterpretation. Utilizing the ASCII table correctly is fundamental for correctly translating binary sequences to characters. Troubleshooting common issues often involves checking for errors in the conversion process and understanding the underlying principles of the ASCII standard.

Optimizing Binary to ASCII Conversion for Efficiency

Streamlining the bit conversion process can significantly enhance the efficiency of binary to ASCII conversion. Leveraging the ASCII table for faster binary sequence-to-text conversion can save time and computational resources. Optimization techniques may involve more efficient algorithms or hardware acceleration to speed up the encoding process.
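One simple form of this idea is a precomputed lookup table that maps every 8-bit pattern in the ASCII range directly to its character. The sketch below is illustrative only; `LOOKUP` and `decode_with_table` are hypothetical names, and whether a table is actually faster than calling `int()` and `chr()` depends on the workload, so it is worth measuring before relying on it.

```python
# Build the table once: "00000000" -> "\x00", ..., "01111111" -> "\x7f"
LOOKUP = {format(code, "08b"): chr(code) for code in range(128)}

def decode_with_table(binary: str) -> str:
    """Decode space-separated 8-bit groups using the precomputed table."""
    return "".join(LOOKUP[group] for group in binary.split())

print(decode_with_table("01001000 01101001"))  # Hi
```

A group that is not in the table (for example a value above 127 or a malformed group) raises `KeyError` in this sketch.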

Case Studies: Real-World Examples of Binary to ASCII Usage

Exploring real-world examples of binary to ASCII usage through case studies provides valuable insights into the practical encoding applications. Analyzing text conversion from binary code to ASCII values in different scenarios can highlight the importance of bit conversion in various industries, from telecommunications to software development.

Security Considerations in Character Encoding

Security in character encoding is a critical concern. Binary to ASCII conversion relies on accurate bit conversion protocols to ensure data integrity. The security of character encoding hinges on the integrity of ASCII values and the proper implementation of encoding standards. Protecting data from corruption or unauthorized access is a critical consideration in the design of encoding systems.

The Future of Character Encoding Standards

The future of character encoding standards will likely involve streamlining bit conversion and enhancing code translation accuracy beyond the ASCII table. The evolution of binary sequences and encoding methods will continue to shape how data is represented and processed in digital systems.

Extending ASCII: Unicode and Beyond

While ASCII has been the backbone of character encoding for decades, the advent of Unicode has extended the capabilities of character representation. ASCII's 128 characters are the first 128 code points in Unicode, so binary to ASCII conversion works the same way for those characters, while Unicode's much larger repertoire accommodates a far greater range of symbols and languages.

Frequently Asked Questions (FAQ)

1. What does 01001000 01101001 mean in English? Let's convert it:

01001000 = 64 + 8 = 72, which is 'H' in ASCII.

01101001 = 64 + 32 + 8 + 1 = 105, which is 'i' in ASCII. So, 01001000 01101001 translates to "Hi".

2. Is binary a programming language? No, binary is not a programming language. It is a number system. Programming languages like Python, Java, or C++ use human-readable syntax which is then compiled or interpreted into machine code, which is a binary representation that a computer's processor can execute directly.

3. What is the difference between a binary to ASCII and an ASCII to binary converter? They perform the reverse operations. A binary to ASCII converter takes binary code (0s and 1s) and turns it into readable text. An ASCII to binary converter takes readable text (like "Hello") and turns it into its binary code equivalent.
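The two directions can be sketched as a pair of Python functions. The names `ascii_to_binary` and `binary_to_text` are made up for this example, and the sketch assumes space-separated 8-bit groups.

```python
def ascii_to_binary(text: str) -> str:
    """Encode ASCII text as space-separated 8-bit binary groups."""
    return " ".join(format(byte, "08b") for byte in text.encode("ascii"))

def binary_to_text(binary: str) -> str:
    """Decode space-separated 8-bit binary groups back into ASCII text."""
    return bytes(int(group, 2) for group in binary.split()).decode("ascii")

encoded = ascii_to_binary("Hello")
print(encoded)                  # 01001000 01100101 01101100 01101100 01101111
print(binary_to_text(encoded))  # Hello
```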

4. Can binary represent anything other than text? Yes. Binary is universal. By using different encoding standards, binary can represent images (JPEG, PNG), audio (MP3, WAV), video (MP4), and any other form of digital data. The key is the software that knows how to interpret that specific sequence of 0s and 1s.

5. How do you convert binary to ASCII in Python? You can do this easily in Python. For a single byte:

```python
binary_string = "01000001"
decimal_value = int(binary_string, 2)  # Convert base-2 string to integer
character = chr(decimal_value)  # Convert integer to its ASCII character
print(character)  # Output: A
```
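For more than one byte, one possible approach is to build a `bytes` object and decode it as ASCII. This sketch assumes the groups are separated by spaces; decoding with `"ascii"` raises `UnicodeDecodeError` if any value is above 127.

```python
binary_string = "01001000 01100101 01101100 01101100 01101111"

# Convert each 8-bit group to an integer and collect them as raw bytes
raw = bytes(int(group, 2) for group in binary_string.split())

# Interpret the raw bytes as ASCII text
text = raw.decode("ascii")
print(text)  # Output: Hello
```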

6. Why is ASCII 8 bits? While original ASCII was 7-bit, the 8-bit byte became the standard unit of data in computing. Using an 8th bit for ASCII (Extended ASCII) allowed for 128 additional characters, and it fit neatly into the byte-oriented architecture of computers. Today, this 8-bit structure forms the basis for UTF-8.

7. Can ASCII represent emojis? No. The standard and extended ASCII sets do not include codes for emojis. Emojis are part of the Unicode standard and are typically represented using multiple bytes in the UTF-8 encoding.
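A quick way to check whether a piece of text fits in ASCII is Python's `str.isascii()` method (available since Python 3.7). The wrapper name `can_be_ascii` is hypothetical.

```python
def can_be_ascii(text: str) -> bool:
    """True if every character has a code in the 0-127 ASCII range."""
    return text.isascii()

print(can_be_ascii("Hi!"))
print(can_be_ascii("Hi 😀"))

# Trying to encode an emoji as ASCII fails
try:
    "😀".encode("ascii")
except UnicodeEncodeError as error:
    print("cannot encode as ASCII:", error.reason)
```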

8. What's the fastest way to convert binary to text? The fastest and most reliable method is to use a high-quality online tool like the one on this page. It eliminates the risk of human error from manual conversion and handles large amounts of data instantly.

9. Are online binary converters safe to use? It depends on the provider. Our tool is 100% safe as it performs all conversions within your browser (client-side). No data is sent to or stored on our servers. Be cautious with converters that require you to upload sensitive files or that have an insecure (non-HTTPS) connection.

10. What is the binary for the letter 'A'? The ASCII decimal code for uppercase 'A' is 65. Its 8-bit binary representation is 01000001.

Conclusion: From Code to Clarity

Binary code is the invisible backbone of our digital lives. Understanding how it translates into the text we read every day is a fundamental skill for anyone interested in technology. While manual conversion is an excellent learning exercise, for speed, accuracy, and efficiency, a free online binary to ASCII converter is an indispensable asset.

We've not only provided you with a best-in-class tool but also equipped you with the knowledge to understand the process, troubleshoot problems, and appreciate the elegant systems that power our digital communication. Bookmark this page as your go-to resource for all things binary and ASCII.

Author Bio

Dustin M. Miller is a Senior Systems Engineer and SEO Content Strategist with over 15 years of experience in network architecture, data encoding, and cybersecurity. He holds certifications in CompTIA Network+ and Security+ and has worked on data migration projects for enterprise-level systems. Dustin is passionate about demystifying complex technical topics and making them accessible to a broader audience.